Negative Data Generative Models

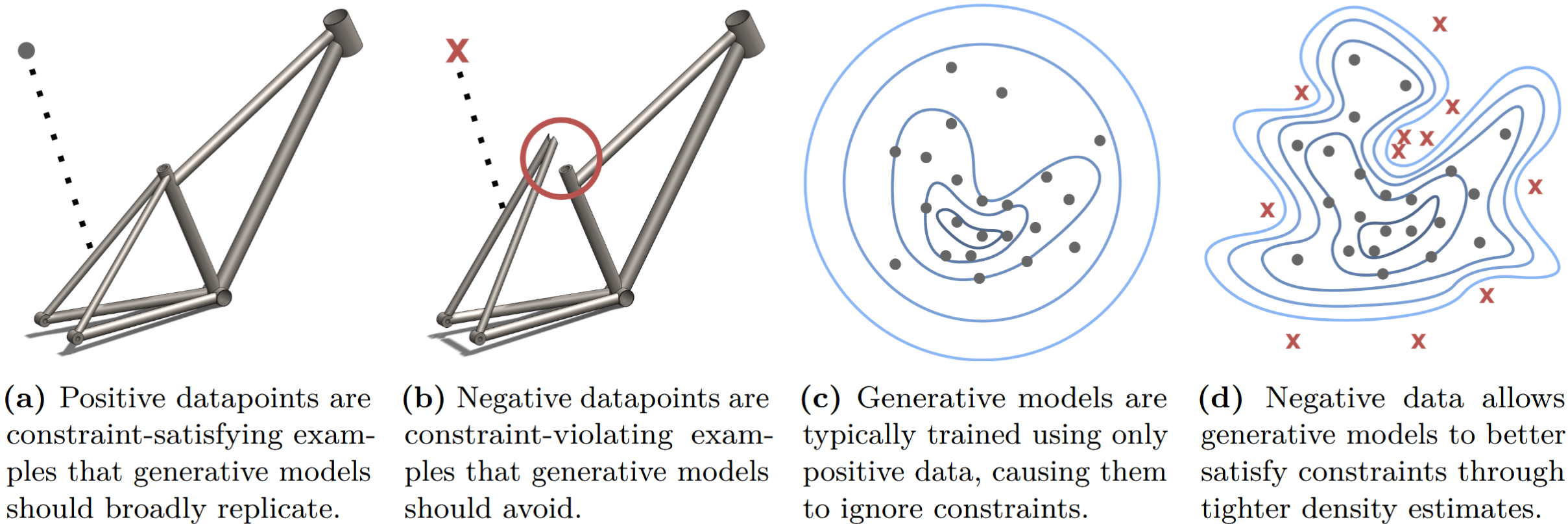

Practitioners conventionally train generative AI models by showing them many positive examples of what the model should generate. In my research, I've proposed a new paradigm that also incorporates negative examples: examples of what the model should not generate. Negative examples are instrumental in teaching generative models constraints, which are essential in many engineering problems, particularly those with safety-critical requirements. Much as humans learn best from a mixture of positive and negative feedback, generative models can train more efficiently and effectively when given negative data in addition to positive data. As a bonus, negative data is often cheaper to generate than positive data, even though it is often more information-rich.